Fault Tolerant Service Containers

Overview

Fault Tolerant Containers are an innovative approach to improving service availability and reliability. Externally provided services are 'contained' and therefore gain added fault tolerance. This is achieved by allowing the container to be configured with a policy which specifies what kind of fault tolerance mechanisms may be applied to the services it contains. The container proxies calls to its services, passing them on to replicas in a pattern determined by the specified policy. A tool and SDK simplify the creation and deployment of the container and its policy.

Fault Tolerance

Fault tolerant computing accepts that faults are unavoidable and may lead to component failure. Well understood mechanisms have therefore evolved that anticipate component failures but prevent these from leading to system failure. These fault tolerance mechanisms largely rely upon the replication of components.

The simplest of all mechanisms is to retry the same component if it fails. An improvement on this is to execute replicas in parallel and accept the first result returned: if one replica fails, one of the others is still likely to return a valid result. Where state is involved, more complex schemes may be required to keep state consistent across replicas. One mechanism that we have concentrated upon, which has the advantage of tolerating content failures, is voting. In its simplest form this involves polling replicas for a result and taking the majority decision. This avoids the problems caused by failures that lead replicas to produce spurious results.
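The three mechanisms above can be sketched in Java as follows. This is a minimal illustration under stated assumptions, not the container's code: `Replication`, `retry`, `firstResult` and `majorityVote` are hypothetical names, replicas are modelled as `Callable`s, and voting polls replicas sequentially and requires a strict majority of all replicas.

```java
import java.util.*;
import java.util.concurrent.*;

/** Hypothetical helpers illustrating retry, first-result and voting over replicas. */
final class Replication {
    private Replication() {}

    /** Retry: invoke the same component again until it succeeds or attempts run out. */
    static <T> T retry(Callable<T> component, int maxAttempts) throws Exception {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        Exception last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return component.call();
            } catch (Exception e) {
                last = e; // remember the failure and try again
            }
        }
        throw last; // every attempt failed
    }

    /** Parallel replicas: return the result of the first replica that completes successfully. */
    static <T> T firstResult(List<Callable<T>> replicas) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(replicas.size());
        try {
            return pool.invokeAny(replicas); // throws ExecutionException only if every replica fails
        } finally {
            pool.shutdownNow(); // cancel replicas that are still running
        }
    }

    /** Voting: poll every replica and accept an answer given by a strict majority of them. */
    static <T> Optional<T> majorityVote(List<Callable<T>> replicas) {
        Map<T, Integer> tally = new HashMap<>();
        for (Callable<T> replica : replicas) {
            try {
                tally.merge(replica.call(), 1, Integer::sum); // assumes non-null results
            } catch (Exception e) {
                // a failed replica simply casts no vote
            }
        }
        int needed = replicas.size() / 2 + 1;
        return tally.entrySet().stream()
                .filter(entry -> entry.getValue() >= needed)
                .map(Map.Entry::getKey)
                .findFirst();
    }
}
```

With three replicas, one crashed or spurious replica cannot change the voted outcome, whereas `firstResult` would happily accept a spurious answer if it arrived first.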

Architecture

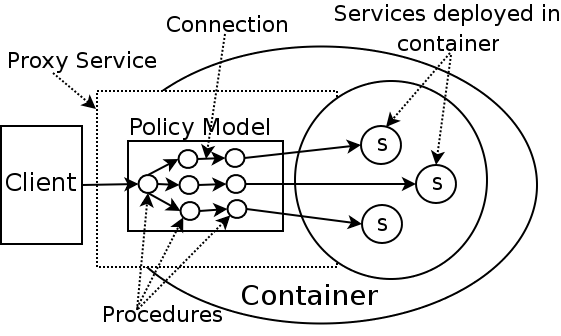

Our architecture applies fault tolerance policies to a set of services via a service container. The container acts as a proxy to the actual services: a message en route to a deployed service is intercepted by the container, which adds a set of domain-independent peripheral services such as fault tolerance. Interception is achieved by endpoint displacement, whereby the endpoint of an actual service is replaced by the endpoint of a proxy service. The actions of the container are transparent to both the client and the service provider.
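Endpoint displacement can be illustrated with a small in-memory sketch. It assumes, purely for illustration, that clients resolve services by name through a registry; `EndpointRegistry`, `ContainerProxy` and `Service` are hypothetical names. Displacing an endpoint rebinds the name to a proxy that wraps the original service, so client code is unchanged and the reply is the same.

```java
import java.util.*;

/** A service is modelled as a function from request to response (hypothetical simplification). */
interface Service {
    String handle(String request);
}

/** Proxy placed in front of the real service; it intercepts every call before forwarding it. */
final class ContainerProxy implements Service {
    private final Service actual;
    private final List<String> intercepted = new ArrayList<>();

    ContainerProxy(Service actual) { this.actual = actual; }

    @Override
    public String handle(String request) {
        intercepted.add(request);      // stand-in for a peripheral service such as fault tolerance
        return actual.handle(request); // forward unchanged, so the client sees the same reply
    }

    List<String> intercepted() { return Collections.unmodifiableList(intercepted); }
}

/** Hypothetical registry through which clients resolve service names to endpoints. */
final class EndpointRegistry {
    private final Map<String, Service> endpoints = new HashMap<>();

    void publish(String name, Service service) { endpoints.put(name, service); }

    Service lookup(String name) {
        Service service = endpoints.get(name);
        if (service == null) throw new NoSuchElementException("no endpoint for " + name);
        return service;
    }

    /** Endpoint displacement: rebind the name to a proxy that wraps the original service. */
    ContainerProxy displace(String name) {
        ContainerProxy proxy = new ContainerProxy(lookup(name));
        endpoints.put(name, proxy);
        return proxy;
    }
}
```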

We apply a policy, in this case a fault tolerance policy, to each message passing through the service container walls. To action the policy, we intercept the service call and pass the message through a model representing that policy. In order to be passed through the model, a message must be wrapped in a context. The job of wrapping a message is done by a Listener object; effectively, a Listener is a Java servlet that is mapped to a given endpoint.
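The Listener's role can be sketched without the servlet machinery. In the container a Listener is a servlet; here `onMessage` merely stands in for the servlet's request handler, the policy model is reduced to a `UnaryOperator` over contexts, and `RawContext`, `Listener` and `XmlListener` are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.*;
import java.util.function.UnaryOperator;

/** Minimal stand-in for a message context (hypothetical). */
final class RawContext {
    final String contentType;
    final byte[] body;
    final Map<String, Object> properties = new HashMap<>();

    RawContext(String contentType, byte[] body) {
        this.contentType = contentType;
        this.body = body;
    }
}

/**
 * Hypothetical Listener. In the container a Listener is a servlet mapped to an endpoint;
 * here the servlet plumbing is left out and onMessage stands in for the request handler.
 */
abstract class Listener {
    private final UnaryOperator<RawContext> policyModel;

    Listener(UnaryOperator<RawContext> policyModel) { this.policyModel = policyModel; }

    /** Each Listener decides how an incoming message is wrapped in a context. */
    protected abstract RawContext wrap(String contentType, byte[] body);

    /** Wrap the message, pass it through the policy model, and return the reply payload. */
    final byte[] onMessage(String contentType, byte[] body) {
        return policyModel.apply(wrap(contentType, body)).body;
    }
}

/** Example Listener for XML messages: records the message kind as a context property. */
final class XmlListener extends Listener {
    XmlListener(UnaryOperator<RawContext> policyModel) { super(policyModel); }

    @Override
    protected RawContext wrap(String contentType, byte[] body) {
        RawContext ctx = new RawContext(contentType, body);
        ctx.properties.put("kind", "xml");
        return ctx;
    }
}
```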

A context is simply a wrapper for a message. The most commonly used context is likely to be SOAPMessageContext, used to wrap SOAP envelopes; other examples include XML and Stream contexts. The context is passed through a policy model from procedure to procedure via connections. All message contexts provide an interface for creating, cloning, and storing properties. Subclasses of MessageContext add methods for dealing with problems specific to their type of message.
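One possible shape for the context hierarchy is sketched below, assuming cloning is done through an explicit copy method rather than `Object.clone()`. `MessageContext` echoes the name used in the text, but its methods and the `XmlMessageContext` and `StreamMessageContext` classes are hypothetical; SOAPMessageContext is left out to keep the example free of external dependencies.

```java
import java.util.*;

/** Hypothetical base class: every context can be created, cloned and can store properties. */
abstract class MessageContext {
    private final Map<String, Object> properties = new HashMap<>();

    void setProperty(String name, Object value) { properties.put(name, value); }

    Object getProperty(String name) { return properties.get(name); }

    /** Subclasses copy their own payload in whatever way suits the message type. */
    protected abstract MessageContext copyPayload();

    /** Clone = fresh payload copy + copied property map (property values themselves are shared). */
    final MessageContext cloneContext() {
        MessageContext copy = copyPayload();
        copy.properties.putAll(properties);
        return copy;
    }
}

/** Hypothetical XML context: the payload is an immutable String, so copying is trivial. */
final class XmlMessageContext extends MessageContext {
    private String xml;

    XmlMessageContext(String xml) { this.xml = xml; }
    String getXml() { return xml; }
    void setXml(String xml) { this.xml = xml; }

    @Override
    protected MessageContext copyPayload() { return new XmlMessageContext(xml); }
}

/** Hypothetical stream context: it must copy its byte buffer so clones never share it. */
final class StreamMessageContext extends MessageContext {
    private final byte[] bytes;

    StreamMessageContext(byte[] bytes) { this.bytes = bytes.clone(); }

    /** Exposes the internal buffer directly (kept simple for the sketch). */
    byte[] bytes() { return bytes; }

    @Override
    protected MessageContext copyPayload() { return new StreamMessageContext(bytes); }
}
```

The stream subclass shows a type-specific concern: a byte buffer must be copied, otherwise replicas handed cloned contexts would share and overwrite the same message.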

The policy model itself consists of an acyclic graph of procedures. Essentially, we are providing nothing more than a mechanism by which actions can be applied to service messages. A procedure implements the actions of the policy model; a redundancy procedure, for example, clones a service message and concurrently redirects the clones down several connections. There are no limitations on the functionality of the policy model or its constituent procedures, which are entirely extensible. Procedures are linked together by connections, which constitute the edges of the graph. Each connection is accessed programmatically from within a procedure but is deployed externally, as part of the policy model.
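The redundancy procedure described above might look like this in outline. `Procedure`, `Redundancy`, `CallReplica` and `Ctx` are hypothetical classes; for brevity, edges are plain object references rather than externally deployed connections, and the first branch to succeed is accepted (a voting procedure would instead wait for several results).

```java
import java.util.*;
import java.util.concurrent.*;
import java.util.function.UnaryOperator;

/** Hypothetical message context: an immutable text payload. */
final class Ctx {
    final String payload;
    Ctx(String payload) { this.payload = payload; }
    /** Trivial here because the payload is immutable; mutable contexts need a real deep copy. */
    Ctx copy() { return new Ctx(payload); }
}

/** A procedure is a graph node; its connections are the outgoing edges. */
abstract class Procedure {
    protected final List<Procedure> connections = new ArrayList<>();
    void connect(Procedure next) { connections.add(next); }
    abstract Ctx invoke(Ctx ctx) throws Exception;
}

/** Leaf procedure that calls one simulated service replica. */
final class CallReplica extends Procedure {
    private final UnaryOperator<String> replica;
    CallReplica(UnaryOperator<String> replica) { this.replica = replica; }

    @Override
    Ctx invoke(Ctx ctx) { return new Ctx(replica.apply(ctx.payload)); }
}

/** Redundancy: clone the context and send one clone down each connection concurrently. */
final class Redundancy extends Procedure {
    @Override
    Ctx invoke(Ctx ctx) throws Exception {
        if (connections.isEmpty()) throw new IllegalStateException("redundancy needs connections");
        ExecutorService pool = Executors.newFixedThreadPool(connections.size());
        try {
            List<Callable<Ctx>> branches = new ArrayList<>();
            for (Procedure next : connections) {
                Ctx clone = ctx.copy(); // each branch gets its own copy of the message
                branches.add(() -> next.invoke(clone));
            }
            return pool.invokeAny(branches); // accept the first branch that succeeds
        } finally {
            pool.shutdownNow();
        }
    }
}
```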

Unlike EJB components, the 'contained' services do not actually reside inside the container. This is also what distinguishes our service container from service containers as used in the more generic web server context. In reality, any of these services could be called directly without traversing the container.

Representing Policy Models and Procedures

In practice, the information essential to a policy model is represented in eXtensible Markup Language (XML). This XML model consists of three compulsory elements: a policy element plus one or more procedure and connection elements. The 'policy' element is the outermost element and represents the whole policy model for this proxy service. A policy element consists of 'procedure' sub-elements that map to procedure objects. Each procedure element indicates the class and identity of a procedure object. A procedure object is an instance of a Java class that implements a procedure interface, and there is a one-to-one mapping between each procedure element in the policy model and a procedure subclass. Each procedure subclass must expose an invokable interface. In addition, each procedure must have a constructor that takes the corresponding XML element from the policy model as an argument. The final compulsory element in the policy model is the 'connection' element. This must be nested inside a procedure element to represent a link to another procedure element. This link indicates that, after being invoked, the 'parent' procedure will pass the message context to the procedure indicated in its nested connection. Procedure elements represent the nodes of a graph, whereas connection elements represent the edges.
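Loading such a model can be sketched with the JDK's DOM parser and reflection. The element names `policy`, `procedure` and `connection` come from the text; the `id`, `class` and `to` attribute names, and the `XmlProcedure`, `EchoProcedure` and `PolicyLoader` classes, are assumptions made for illustration. Each procedure class is instantiated through a public constructor taking its own `Element`, mirroring the constructor requirement described above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.*;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.*;

/** Hypothetical procedure base: reads the parts every procedure shares from its element. */
abstract class XmlProcedure {
    final String id;
    final List<String> connectionTargets = new ArrayList<>();

    protected XmlProcedure(Element element) {
        id = element.getAttribute("id");
        for (Element conn : children(element, "connection")) connectionTargets.add(conn.getAttribute("to"));
    }

    abstract String invoke(String message);

    /** Direct child elements with the given tag (ignores deeper, procedure-specific XML). */
    static List<Element> children(Element parent, String tag) {
        List<Element> result = new ArrayList<>();
        NodeList nodes = parent.getChildNodes();
        for (int i = 0; i < nodes.getLength(); i++) {
            Node node = nodes.item(i);
            if (node instanceof Element && ((Element) node).getTagName().equals(tag)) result.add((Element) node);
        }
        return result;
    }
}

/** Trivial example procedure that passes the message through unchanged. */
final class EchoProcedure extends XmlProcedure {
    public EchoProcedure(Element element) { super(element); }
    @Override String invoke(String message) { return message; }
}

final class PolicyLoader {
    /** Parses a policy document and instantiates one object per procedure element. */
    static Map<String, XmlProcedure> load(String xml) throws Exception {
        Element policy = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                .getDocumentElement();
        if (!policy.getTagName().equals("policy")) throw new IllegalArgumentException("root must be <policy>");
        Map<String, XmlProcedure> procedures = new LinkedHashMap<>();
        for (Element el : XmlProcedure.children(policy, "procedure")) {
            Class<? extends XmlProcedure> type =
                    Class.forName(el.getAttribute("class")).asSubclass(XmlProcedure.class);
            // One-to-one mapping: each element becomes an instance built by its (Element) constructor.
            procedures.put(el.getAttribute("id"), type.getConstructor(Element.class).newInstance(el));
        }
        // Every connection must point at a procedure that exists in the model.
        for (XmlProcedure p : procedures.values())
            for (String target : p.connectionTargets)
                if (!procedures.containsKey(target)) throw new IllegalArgumentException("unknown procedure: " + target);
        return procedures;
    }
}
```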

Deployment Tool

Containers are deployed as Java web applications. The step of

creating a WAR file containing enough information to function as a

service container is sufficiently complex to require a tool. We have

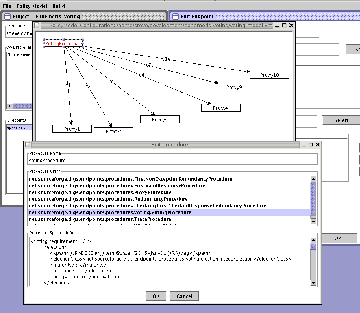

developed such a graphical tool to aid in building and configuring a

fault tolerant service container. The tool represents a bridge between

the code development and container deployment.

A user of the tool begins by creating a project. A project consists of a collection of jars developed by the user and a series of endpoint representations. The jars must contain the Listener and Procedure classes developed in accordance with the SDK. Each endpoint represents a proxy service to be deployed into the service container. The name of the endpoint, together with that of the project, is used to create its eventual URL.
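As an illustration of how such a URL might be formed, the sketch below assumes a layout of `http://host:port/<project>/<endpoint>`. That is what results if the WAR is named after the project (Tomcat uses the WAR file name as the context path by default) and each Listener is mapped to `/<endpoint>`; the layout and the `EndpointUrls` class are assumptions, not the tool's documented behaviour.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical helper building an endpoint URL from project and endpoint names. */
final class EndpointUrls {
    private EndpointUrls() {}

    /** Assumed layout: http://host:port/<project>/<endpoint>. */
    static URI endpointUri(String host, int port, String project, String endpoint) throws URISyntaxException {
        if (project.isBlank() || endpoint.isBlank()) throw new IllegalArgumentException("names must not be blank");
        if (project.contains("/") || endpoint.contains("/")) throw new IllegalArgumentException("names must be single path segments");
        // The multi-argument URI constructor percent-encodes illegal characters such as spaces.
        return new URI("http", null, host, port, "/" + project + "/" + endpoint, null, null);
    }
}
```

For example, a project named `my project` with an endpoint `vote` would yield the raw path `/my%20project/vote`.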

Each endpoint maps to precisely one Listener and one policy model. The Listener is selected from a list of candidates within the imported jar files. A policy model is chosen using a file selection dialogue. Once chosen, the policy model can be edited, rendering the graphical display shown in the screenshot. Policy models can also be created and edited directly from the main menu, or externally using any XML or text editor.

Every policy model is displayed as a directed graph and can be edited graphically by clicking on nodes or edges. Each node maps to a procedure and each edge represents a connection between procedures. A selected node can be edited by right-clicking; editing requires a name, an implementing Java class, and a nest of embedded XML. A list of available procedure classes is displayed; again, these come from the imported jar files within the project. The name is reflected in the graph representation. Exactly one procedure is chosen as the root.
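Before saving, a tool like this might check two of the structural rules stated in this document: exactly one root, and no cycles (the policy model is an acyclic graph). The sketch below is an assumption about how such a check could be written, not the tool's code; `PolicyGraphCheck` and the map-of-names representation are hypothetical.

```java
import java.util.*;

/** Hypothetical structural check for a policy graph given as procedure name -> connected names. */
final class PolicyGraphCheck {
    private PolicyGraphCheck() {}

    /** Returns a list of problems; an empty list means the graph passed every check. */
    static List<String> validate(Map<String, List<String>> edges, Set<String> roots) {
        List<String> problems = new ArrayList<>();
        if (roots.size() != 1) problems.add("exactly one root expected, found " + roots.size());
        for (String root : roots)
            if (!edges.containsKey(root)) problems.add("unknown root: " + root);
        for (Map.Entry<String, List<String>> entry : edges.entrySet())
            for (String target : entry.getValue())
                if (!edges.containsKey(target)) problems.add(entry.getKey() + " -> unknown " + target);
        // Depth-first search with three states; reaching an "on stack" node means a cycle.
        Map<String, Integer> state = new HashMap<>(); // absent = unvisited, 1 = on stack, 2 = done
        for (String node : edges.keySet()) {
            if (hasCycle(node, edges, state)) {
                problems.add("cycle reachable from " + node);
                break;
            }
        }
        return problems;
    }

    private static boolean hasCycle(String node, Map<String, List<String>> edges, Map<String, Integer> state) {
        int s = state.getOrDefault(node, 0);
        if (s == 1) return true;  // back edge: node is already on the current path
        if (s == 2) return false; // fully explored earlier without finding a cycle
        state.put(node, 1);
        for (String next : edges.getOrDefault(node, List.of()))
            if (hasCycle(next, edges, state)) return true;
        state.put(node, 2);
        return false;
    }
}
```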

Each procedure may require different nested XML. No limits are placed on that XML, provided it is well-formed, and neither the tool nor the architecture requires XML schemas or document type definitions (DTDs). The XML a procedure requires must therefore be known when developing it, because each procedure must parse its own nested XML; the abstract procedure class knows only how to deal with procedure and connection tags. An example of embedded XML is the voting rules in our ephemeris voting example.
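The format of the voting rules is not given here, so the following sketch invents one: a `<voting quorum="2"/>` element nested inside the procedure element. `VotingProcedure`, its `decide` method and the `quorum` attribute are hypothetical. The point it illustrates is that the procedure, not the framework, parses its own nested XML, while the shared `procedure` and `connection` tags are left to the base class.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.*;
import java.util.function.Function;
import java.util.stream.Collectors;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.*;

/** Hypothetical voting procedure that parses its own nested <voting> element. */
final class VotingProcedure {
    private final int quorum;

    VotingProcedure(Element procedureElement) {
        // Only the custom XML is read here; <procedure>/<connection> handling is assumed to live elsewhere.
        NodeList rules = procedureElement.getElementsByTagName("voting");
        if (rules.getLength() != 1) throw new IllegalArgumentException("expected exactly one <voting> element");
        quorum = Integer.parseInt(((Element) rules.item(0)).getAttribute("quorum"));
        if (quorum < 1) throw new IllegalArgumentException("quorum must be >= 1");
    }

    /** Returns the most common answer if at least `quorum` replicas gave it (ties broken arbitrarily). */
    Optional<String> decide(List<String> answers) {
        Map<String, Long> counts = answers.stream()
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
        return counts.entrySet().stream()
                .filter(e -> e.getValue() >= quorum)
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }

    /** Convenience parser used to obtain a procedure element from a string. */
    static Element parse(String xml) throws Exception {
        return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                .getDocumentElement();
    }
}
```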

The policy model editor saves the policy model in XML format, embedding custom XML in the appropriate procedure tag. Models will vary in complexity, but the screenshot shows that a model can remain simple whilst providing complex functionality. There is an obvious tradeoff between the complexity of the procedures and that of the model within which they reside.

Final deployment is invoked from the main menu. This creates the WAR file described earlier and places it wherever the user dictates. Creating the WAR file requires copying the jars and XML model files, generating a web application deployment descriptor, and finally archiving everything into the WAR. If the WAR is placed in the right location, in this case the webapps directory of a Tomcat server, the service container is deployed dynamically and is immediately ready for use. To use a proxy service, a client points at the corresponding endpoint.
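The WAR-building steps can be sketched with `java.util.zip`, since a WAR is a ZIP archive with a `WEB-INF` directory. Placing jars in `WEB-INF/lib` and the deployment descriptor at `WEB-INF/web.xml` follows the servlet specification; the `WEB-INF/policies` directory, the `policy` init parameter, and the `Endpoint` and `WarBuilder` classes are hypothetical. Names are assumed to be XML-safe, and the generated descriptor is deliberately minimal (a real one would also declare the servlet specification version).

```java
import java.io.*;
import java.nio.charset.StandardCharsets;
import java.nio.file.*;
import java.util.*;
import java.util.zip.*;

/** Hypothetical description of one endpoint in a project. */
final class Endpoint {
    final String name, listenerClass, policyFile;
    Endpoint(String name, String listenerClass, String policyFile) {
        this.name = name;
        this.listenerClass = listenerClass;
        this.policyFile = policyFile;
    }
}

final class WarBuilder {
    private WarBuilder() {}

    /** Minimal web.xml: one servlet (Listener) per endpoint, then one mapping per endpoint. */
    static String webXml(List<Endpoint> endpoints) {
        StringBuilder sb = new StringBuilder("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n<web-app>\n");
        for (Endpoint e : endpoints) {
            sb.append("  <servlet><servlet-name>").append(e.name).append("</servlet-name>")
              .append("<servlet-class>").append(e.listenerClass).append("</servlet-class>")
              .append("<init-param><param-name>policy</param-name><param-value>/WEB-INF/policies/")
              .append(e.policyFile).append("</param-value></init-param></servlet>\n");
        }
        for (Endpoint e : endpoints) {
            sb.append("  <servlet-mapping><servlet-name>").append(e.name).append("</servlet-name>")
              .append("<url-pattern>/").append(e.name).append("</url-pattern></servlet-mapping>\n");
        }
        return sb.append("</web-app>\n").toString();
    }

    /** Copies jars and policy files, generates web.xml, and archives everything into the WAR. */
    static void write(Path war, List<Path> jars, List<Path> policyFiles, List<Endpoint> endpoints) throws IOException {
        try (ZipOutputStream zip = new ZipOutputStream(Files.newOutputStream(war))) {
            for (Path jar : jars) add(zip, "WEB-INF/lib/" + jar.getFileName(), Files.readAllBytes(jar));
            for (Path policy : policyFiles) add(zip, "WEB-INF/policies/" + policy.getFileName(), Files.readAllBytes(policy));
            add(zip, "WEB-INF/web.xml", webXml(endpoints).getBytes(StandardCharsets.UTF_8));
        }
    }

    private static void add(ZipOutputStream zip, String name, byte[] data) throws IOException {
        zip.putNextEntry(new ZipEntry(name));
        zip.write(data);
        zip.closeEntry();
    }
}
```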
